Unleash Your Creative Power with DragGAN: Revolutionizing Image Manipulation Control

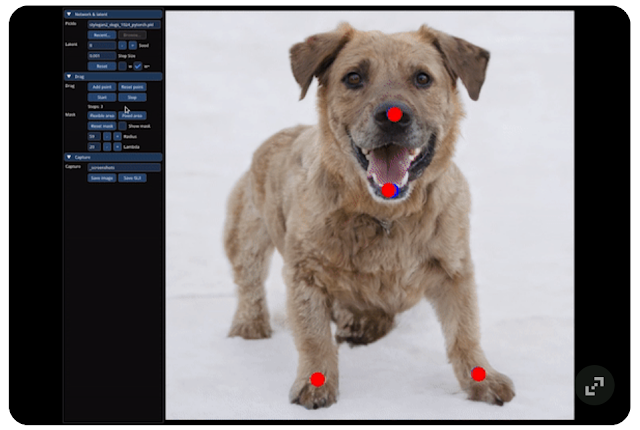

In image synthesis, flexible and precise control over generated visual content is a long-sought goal. Existing approaches typically gain that control through manually annotated training data or prior 3D models, which often limits their flexibility, precision, and generality. DragGAN takes a new approach to controlling generative adversarial networks (GANs): users click handle points on an image and interactively "drag" them to the target positions they choose.

DragGAN, introduced by Xingang Pan and colleagues in the paper "Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold" (SIGGRAPH 2023), has two key components. The first is feature-based motion supervision, which drives each handle point toward its target position by optimizing the GAN's latent code, so edits land where the user intends. The second is a point tracking approach that uses the discriminative features of the GAN's generator to keep localizing the handle points as the image changes.
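To make the two components concrete, here is a minimal, pure-Python sketch of both steps on a toy feature map. Everything in it is an illustrative assumption rather than DragGAN's actual code: the function names (`motion_supervision_loss`, `track_point`, and their helpers) are hypothetical, features are plain lists standing in for generator feature maps, and both steps use a simple square window around the handle point. Motion supervision compares features around the handle point `p` with features shifted one unit step toward the target `t`; a real implementation would backpropagate this loss through the generator to update the latent code, whereas this sketch only evaluates it. Point tracking then moves `p` to the nearby pixel whose feature best matches the handle's initial feature `f0`.

```python
import math
from typing import List, Tuple

# Hypothetical toy stand-in for a generator feature map: an H x W grid of C-dim vectors.
FeatureMap = List[List[List[float]]]
Point = Tuple[float, float]  # (row, col) in feature-map coordinates


def l1(a: List[float], b: List[float]) -> float:
    """L1 distance between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def sample(fmap: FeatureMap, r: float, c: float) -> List[float]:
    """Bilinearly interpolate the feature at a fractional location (clamped to the grid)."""
    h, w = len(fmap), len(fmap[0])
    r = min(max(r, 0.0), h - 1.0)
    c = min(max(c, 0.0), w - 1.0)
    r0, c0 = int(r), int(c)
    r1, c1 = min(r0 + 1, h - 1), min(c0 + 1, w - 1)
    fr, fc = r - r0, c - c0
    return [
        (fmap[r0][c0][k] * (1 - fc) + fmap[r0][c1][k] * fc) * (1 - fr)
        + (fmap[r1][c0][k] * (1 - fc) + fmap[r1][c1][k] * fc) * fr
        for k in range(len(fmap[r0][c0]))
    ]


def neighbourhood(p: Point, radius: int, h: int, w: int) -> List[Tuple[int, int]]:
    """Integer grid points in a square window of half-width `radius` around p (assumption)."""
    pr, pc = round(p[0]), round(p[1])
    return [
        (r, c)
        for r in range(max(pr - radius, 0), min(pr + radius, h - 1) + 1)
        for c in range(max(pc - radius, 0), min(pc + radius, w - 1) + 1)
    ]


def motion_supervision_loss(fmap: FeatureMap, p: Point, t: Point, radius: int) -> float:
    """Sum of L1 gaps between F(q) and F(q + d) near handle p, where d is the unit step toward t.

    In a real setup F(q) would be held constant and the loss backpropagated
    into the latent code; this sketch only computes the loss value.
    """
    dr, dc = t[0] - p[0], t[1] - p[1]
    norm = math.hypot(dr, dc)
    if norm == 0:  # handle already at its target: nothing to supervise
        return 0.0
    dr, dc = dr / norm, dc / norm
    h, w = len(fmap), len(fmap[0])
    return sum(
        l1(fmap[r][c], sample(fmap, r + dr, c + dc))
        for r, c in neighbourhood(p, radius, h, w)
    )


def track_point(fmap: FeatureMap, f0: List[float], p: Point, radius: int) -> Tuple[int, int]:
    """Relocate handle p to the nearby pixel whose feature is closest (L1) to its initial feature f0."""
    h, w = len(fmap), len(fmap[0])
    return min(neighbourhood(p, radius, h, w), key=lambda q: l1(fmap[q[0]][q[1]], f0))


# Toy feature map where each pixel's feature is simply its own (row, col).
grid = [[[float(r), float(c)] for c in range(10)] for r in range(10)]
print(track_point(grid, [3.0, 4.0], (2, 2), radius=2))  # (3, 4)
print(round(motion_supervision_loss(grid, (5, 5), (8, 9), radius=1), 6))  # 12.6
```

In the method described above, the two steps alternate: each optimization step nudges the image content, then tracking relocates the handle points before the next step, repeating until the handles reach their targets.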

Step into DragGAN and its power becomes clear. Anyone can deform an image with precise control over where pixels go, manipulating the pose, shape, expression, or layout of diverse categories such as animals, cars, humans, and landscapes. Because DragGAN operates on the learned generative image manifold of a GAN, it tends to produce realistic outputs even in challenging scenarios, such as hallucinating occluded content or deforming shapes in a way that respects an object's rigidity.
