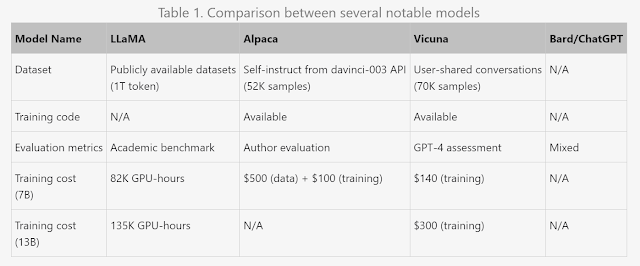

If you are interested in chatbots, you might have heard of LLaMA, a family of foundation language models pretrained on publicly available text. LLaMA was released by Meta AI in February 2023, and it posted strong results for its size on many benchmarks.

But what if you want to use LLaMA for conversational AI? How can you fine-tune it to make it more engaging, coherent, and human-like? That's where Vicuna comes in.

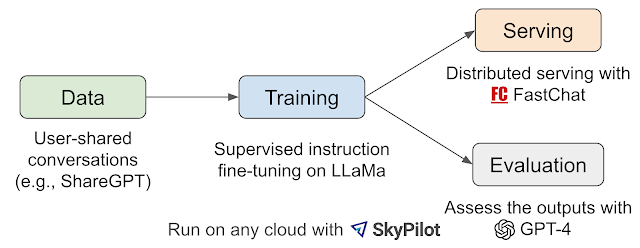

Vicuna is an open-source chatbot that was trained by further fine-tuning LLaMA on user-shared conversations collected from ShareGPT, a website where users can share their ChatGPT conversations. Vicuna uses the LLaMA-13B weights as the starting point, and it was trained with PyTorch FSDP on 8 A100 GPUs in one day. The code and weights, along with an online demo, are publicly available for non-commercial use. Vicuna aims to address the lack of training and architecture details in existing LLMs such as OpenAI's ChatGPT and Google's Bard.

Vicuna is not just another chatbot. It is a chatbot that can impress GPT-4, the most powerful language model from OpenAI at the time of Vicuna's release. In a preliminary evaluation that used GPT-4 as a judge, Vicuna-13B achieved more than 90% of the quality of OpenAI ChatGPT and Google Bard, while outperforming other models like LLaMA and Stanford Alpaca in more than 90% of cases.

The evaluation used 80 questions spread across eight categories, such as Fermi problems, roleplay scenarios, and coding and math tasks. The judge model was asked to rate each chatbot's answer on a scale of 1 to 10 based on helpfulness, relevance, accuracy, and level of detail.
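A headline figure such as "more than 90% of the quality" can be illustrated with a few lines of arithmetic. The sketch below assumes the relative quality is the sum of one model's judge scores divided by the sum of the baseline's scores over the same questions. The helper `relative_quality` is hypothetical and the scores are made-up placeholders, not published results.

```python
from typing import Sequence


def relative_quality(model_scores: Sequence[float],
                     baseline_scores: Sequence[float]) -> float:
    """Return the model's total judge score as a fraction of the baseline's.

    Assumption: both lists hold scores for the same questions, in the same order.
    """
    if len(model_scores) != len(baseline_scores):
        raise ValueError("both models must be scored on the same questions")
    baseline_total = sum(baseline_scores)
    if baseline_total == 0:
        raise ValueError("baseline total must be non-zero")
    return sum(model_scores) / baseline_total


# Placeholder judge scores for four questions (illustrative only).
candidate = [4.0, 4.5, 3.5, 4.5]
baseline = [4.5, 4.5, 4.0, 5.0]

ratio = relative_quality(candidate, baseline)
print(f"relative quality: {ratio:.1%}")
```

A ratio computed this way depends heavily on the question set and on the judge, so it is best read as a rough indicator rather than a rigorous benchmark.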

How did they do it? The team made several improvements to the training recipe, including memory optimizations to enable Vicuna's understanding of long context, adjustment of the training loss to account for multi-round conversations, and utilization of gradient checkpointing and flash attention. They also built a serving system that can handle multiple models with distributed workers, and that can leverage cheaper spot instances from multiple clouds to reduce the serving costs.
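Gradient checkpointing trades extra computation for lower memory use: only a few activations are kept during the forward pass, and the rest are recomputed when the backward pass needs them. The toy sketch below shows the idea on a chain of scalar `tanh` layers in plain Python. It is an illustration under simplifying assumptions, not the Vicuna training code, and all function names are hypothetical.

```python
import math
from typing import List


def layer(w: float, x: float) -> float:
    """One toy 'layer': a scalar weight followed by tanh."""
    return math.tanh(w * x)


def layer_grad(w: float, x: float) -> float:
    """Derivative of layer(w, x) with respect to its input x."""
    return w * (1.0 - math.tanh(w * x) ** 2)


def grad_full_storage(weights: List[float], x: float) -> float:
    """Backprop that keeps every layer input in memory."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = layer(w, x)
    grad = 1.0
    for w, inp in zip(reversed(weights), reversed(inputs)):
        grad *= layer_grad(w, inp)
    return grad


def grad_checkpointed(weights: List[float], x: float, every: int) -> float:
    """Backprop that stores only one input per segment of `every` layers."""
    checkpoints = []  # inputs saved at segment boundaries only
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints.append(x)
        x = layer(w, x)
    grad = 1.0
    # Walk segments from last to first, recomputing the inputs inside each one.
    for seg in reversed(range(len(checkpoints))):
        start = seg * every
        seg_weights = weights[start:start + every]
        h = checkpoints[seg]
        seg_inputs = []
        for w in seg_weights:
            seg_inputs.append(h)
            h = layer(w, h)
        for w, inp in zip(reversed(seg_weights), reversed(seg_inputs)):
            grad *= layer_grad(w, inp)
    return grad


weights = [0.9, -1.1, 0.7, 1.3, -0.8, 1.05, 0.6, -1.2]
g_full = grad_full_storage(weights, 0.5)
g_ckpt = grad_checkpointed(weights, 0.5, every=4)
print(g_full, g_ckpt)
```

Both functions return the same gradient. The checkpointed version holds two saved inputs plus one segment of four recomputed inputs at a time, instead of all eight, and pays for that with a second forward pass through each segment.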

Vicuna's Architecture

Vicuna is based on LLaMA-13B, a 13-billion-parameter foundation model. LLaMA itself is a pretrained model rather than one fine-tuned on a fixed set of tasks; Alpaca is a separate instruction-tuned derivative of LLaMA. LLaMA-13B has a decoder-only transformer architecture with 40 layers, 40 attention heads, and a hidden size of 5120. It uses a SentencePiece byte-pair-encoding tokenizer with a vocabulary size of 32K.

To make LLaMA more suitable for conversational AI, Vicuna further fine-tunes it on user-shared conversations collected from ShareGPT. ShareGPT is a website where users can share their ChatGPT conversations with others. The team collected about 70K conversations from ShareGPT, which cover a wide range of topics and styles.

The team used the training scripts provided by the developers of Alpaca and enhanced them to better handle multi-round conversations and long sequences. The training was done with PyTorch FSDP on 8 A100 GPUs in one day. The team also made several improvements to the training recipe, such as:

Memory optimizations: To enable Vicuna's understanding of long context, which puts a lot of memory pressure on the GPUs, the team utilized gradient checkpointing and flash attention. Gradient checkpointing saves memory by recomputing intermediate activations during the backward pass instead of storing all of them. Flash attention computes exact attention in small tiles that fit in fast on-chip memory, so the full attention matrix is never materialized in GPU memory; it is not a sparse approximation.

Training loss adjustment: To account for multi-round conversations, the team computed the fine-tuning loss solely on the chatbot's output instead of the entire sequence. This way, the model can focus more on generating relevant and coherent responses rather than memorizing the input.

Data augmentation: To increase the diversity and robustness of Vicuna's responses, the team applied data augmentation techniques such as backtranslation and paraphrasing to some of the conversations in the training data.

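Cost reduction via Spot Instance: With a 40x larger dataset and 4x sequence length compared with Alpaca's training setup, training expenses posed a considerable challenge. The team used SkyPilot managed spot jobs, which run on cheaper preemptible cloud instances and recover automatically from preemptions. This reportedly cut the training cost of the 13B model from around $1,000 to around $300.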
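The training loss adjustment described above can be sketched as a label mask. The example below assumes a conversation is a list of `(role, token_ids)` turns and marks every non-assistant token with `-100`, the label value that PyTorch's `CrossEntropyLoss` ignores by default. The helper names and token ids are hypothetical, and the one-position label shift used in next-token prediction is left out for brevity.

```python
from typing import List, Tuple

IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def build_labels(turns: List[Tuple[str, List[int]]]) -> Tuple[List[int], List[int]]:
    """Flatten a conversation and mask out everything the chatbot did not write."""
    input_ids: List[int] = []
    labels: List[int] = []
    for role, token_ids in turns:
        input_ids.extend(token_ids)
        if role == "assistant":
            labels.extend(token_ids)  # supervised: the model should predict these
        else:
            labels.extend([IGNORE_INDEX] * len(token_ids))  # context only
    return input_ids, labels


def masked_mean_loss(per_token_loss: List[float], labels: List[int]) -> float:
    """Average the loss over unmasked (assistant) positions only."""
    kept = [loss for loss, y in zip(per_token_loss, labels) if y != IGNORE_INDEX]
    return sum(kept) / len(kept) if kept else 0.0


# A two-round conversation with made-up token ids.
turns = [
    ("user", [11, 12, 13]),
    ("assistant", [21, 22]),
    ("user", [14]),
    ("assistant", [23, 24, 25]),
]
input_ids, labels = build_labels(turns)

# Made-up per-token losses, one per input position.
per_token_loss = [2.0, 2.0, 2.0, 1.0, 0.5, 2.0, 0.5, 1.0, 1.0]
loss = masked_mean_loss(per_token_loss, labels)
print(labels, loss)
```

The user tokens still appear in `input_ids`, so the model conditions on them, but they contribute nothing to the loss or the gradient.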

The team also built a serving system that can serve multiple models with distributed workers.

How can I try Vicuna?

The team has published an online demo alongside the code and weights, all of which are available for non-commercial use.

What can Vicuna do?

Vicuna can handle different types of conversations with ease. Here are some examples of what you can ask Vicuna to do:

Casual chat: You can have a friendly and engaging conversation with Vicuna about anything you like. For example, you can ask Vicuna about its hobbies, preferences, opinions, etc.

Math problems: You can ask Vicuna to solve simple or complex math problems for you. For example, you can ask Vicuna to calculate the area of a circle, the derivative of a function, or the factorial of a number.

Code generation: You can ask Vicuna to write code for you in various programming languages. For example, you can ask Vicuna to write a function that reverses a string in Python, Java, or C++.

Grammar correction: You can ask Vicuna to correct your grammar mistakes or improve your writing style. For example, you can ask Vicuna to rewrite a sentence in a more concise or polite way.

The introduction of Vicuna-13B highlights the growing potential of open-source chatbots in the field of artificial intelligence. That Vicuna-13B comes close to the reported quality of much larger and more expensive systems like OpenAI ChatGPT and Google Bard, at least in a preliminary GPT-4-judged evaluation, is a testament to the effectiveness of community-driven efforts in developing cutting-edge technology.
