Revolutionizing Chatbots: Introducing WebLLM - A Chatbot That Runs Inside Your Browser

Have you ever wondered what it would be like to have a chatbot that runs in your browser without any server support? Imagine being able to talk to a large language model (LLM) that can generate natural and engaging responses based on your instructions, while also respecting your privacy and saving you money. Sounds too good to be true, right?

Well, not anymore. Introducing WebLLM, an open-source project that brings LLM-based chatbots to web browsers. Everything runs inside the browser with no server support and is accelerated with WebGPU. This opens up a lot of fun opportunities to build AI assistants for everyone and to preserve privacy while enjoying GPU acceleration.

What is WebLLM?

WebLLM is a project that aims to bring large language models (LLMs) and LLM-based chatbots to web browsers. LLMs are neural networks that can generate natural language texts based on some input, such as a prompt, a query, or an instruction. LLMs have been shown to produce impressive results in various natural language tasks, such as text summarization, question answering, text generation, and conversational agents.

However, LLMs are usually big and compute-heavy. To run them, you need a powerful GPU or a cloud service that can handle the inference workload. This means that you have to pay for the computation cost and also share your data with a third-party server. Moreover, you have to rely on a specific type of GPU or framework that supports the LLM of your choice.
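To make "big" concrete, here is a rough back-of-the-envelope sketch of how much space the weights of a model take up. The 7-billion parameter count is taken from the name of the Vicuna-7b model mentioned later in this post; the 16-bit and 4-bit precisions are illustrative assumptions, not figures published by the WebLLM project, and the helper name `weightSizeGB` is made up for this example.

```typescript
// Approximate storage needed for model weights alone, in decimal gigabytes.
// This ignores activations and any other runtime memory, so real usage is higher.
function weightSizeGB(paramCount: number, bitsPerWeight: number): number {
  const bytes = (paramCount * bitsPerWeight) / 8; // 8 bits per byte
  return bytes / 1e9;
}

const params = 7e9; // assumed: roughly 7 billion parameters

console.log(weightSizeGB(params, 16)); // 16-bit weights (assumed precision)
console.log(weightSizeGB(params, 4));  // 4-bit quantized weights (assumed precision)
```

Under these assumptions the weights come to about 14 GB at 16 bits per weight and about 3.5 GB at 4 bits, which is why running such a model has traditionally meant a dedicated GPU or a cloud service.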

WebLLM aims to overcome these limitations by bringing LLMs directly to the client side and running them inside a browser. This way, you can enjoy the benefits of LLMs without compromising your privacy or paying for extra computation. In principle, WebLLM can run on any device with a WebGPU-capable browser, such as a laptop, tablet, or smartphone, provided the device has enough GPU memory to hold the model.

How does WebLLM work?

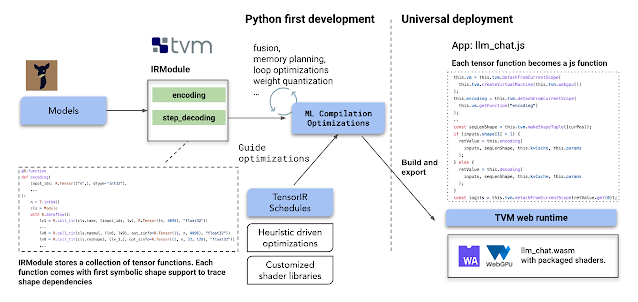

WebLLM leverages WebGPU, a new web standard that enables high-performance graphics and compute operations on the web. WebGPU exposes the GPU capabilities of the device to the browser, allowing web applications to run GPU-accelerated tasks such as rendering, machine learning, and gaming.
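Because WebGPU is not available in every browser, a page that depends on it usually checks for it first. The sketch below shows one way to do that with the standard `navigator.gpu.requestAdapter()` call. The `NavigatorLike` and `GpuLike` interfaces and the function names are hypothetical helpers introduced here so that the example type-checks on its own; this is not WebLLM's actual startup code.

```typescript
// Minimal structural types (hypothetical) so the sketch compiles without
// the full WebGPU type definitions.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

type WebGPUStatus = "unsupported" | "no-adapter" | "available";

// Synchronous check: does the browser expose the WebGPU entry point at all?
function hasWebGPU(nav: NavigatorLike): boolean {
  return nav.gpu !== undefined;
}

// Asynchronous check: the API can exist while no usable GPU adapter is found.
async function probeWebGPU(nav: NavigatorLike): Promise<WebGPUStatus> {
  const gpu = nav.gpu;
  if (gpu === undefined) return "unsupported";
  const adapter = await gpu.requestAdapter();
  return adapter === null ? "no-adapter" : "available";
}
```

In a real page you would pass the browser's global `navigator` object and show a friendly message when the result is anything other than `"available"`.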

WebLLM uses WebGPU to run LLMs inside the browser. Rather than relying on a general-purpose JavaScript machine learning library, it builds on the MLC LLM project and the Apache TVM compiler stack, which compile the model into WebGPU kernels and WebAssembly. WebLLM builds on open-source models such as LLaMA, Alpaca, Vicuna, and Dolly; Alpaca and Vicuna are themselves fine-tuned versions of LLaMA. These LLMs are trained on large corpora of text and then fine-tuned to follow natural language instructions.

To use WebLLM, you just need to open a web page that hosts the chatbot demo. The chatbot first fetches the model parameters into a local cache. This download may take a few minutes, but it only happens on the first run; subsequent refreshes and runs are faster. Then, you can enter your input and click “Send” to start chatting with the LLM of your choice. The chatbot generates responses based on your instructions and displays them on the screen.
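The "download once, then reuse" behaviour described above is a cache-first loading pattern. Here is a minimal sketch of that pattern. The `ShardCache` interface, the in-memory `MemoryCache`, and the `loadShard` function are all hypothetical names used for illustration; a real page would more likely back the cache with persistent browser storage, and this is not a copy of WebLLM's own loader.

```typescript
// Hypothetical storage interface, loosely modelled on browser caches.
interface ShardCache {
  get(url: string): Promise<Uint8Array | undefined>;
  put(url: string, data: Uint8Array): Promise<void>;
}

// Function that actually downloads a file (for example, a wrapper around fetch).
type Downloader = (url: string) => Promise<Uint8Array>;

// Simple in-memory stand-in so the sketch runs anywhere.
class MemoryCache implements ShardCache {
  private store = new Map<string, Uint8Array>();

  async get(url: string): Promise<Uint8Array | undefined> {
    return this.store.get(url);
  }

  async put(url: string, data: Uint8Array): Promise<void> {
    this.store.set(url, data);
  }
}

// Cache-first load: hit the network only when the cache has no copy yet.
async function loadShard(
  url: string,
  cache: ShardCache,
  download: Downloader,
): Promise<{ data: Uint8Array; fromCache: boolean }> {
  const cached = await cache.get(url);
  if (cached !== undefined) {
    return { data: cached, fromCache: true };
  }
  const data = await download(url); // slow path, first run only
  await cache.put(url, data);
  return { data, fromCache: false };
}
```

The first call for a given URL takes the slow path and stores the result; later calls return the stored copy without touching the network, which is why only the first visit has to wait for the download.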

Why should you try WebLLM?

WebLLM offers several advantages over traditional chatbot services that rely on server-side inference. Here are some of them:

Privacy: By running LLMs inside the browser, WebLLM ensures that your data stays on your device and is not shared with any third-party server. You can chat with the LLM without worrying about data leakage or surveillance.

Cost: WebLLM eliminates the need for server-side computation. After the one-time model download, chatting itself sends no prompts or responses over the network, so you can chat without paying for cloud inference.

Accessibility: WebLLM enables you to access LLMs from any device with a browser that supports WebGPU. You can chat with the LLM from your laptop, tablet, or smartphone without installing anything beyond the browser itself.

Diversity: WebLLM lets you choose among different LLMs that have been fine-tuned for different tasks or domains, so you can pick the model that best fits your needs and preferences.

How can you get started with WebLLM?

To get started with WebLLM, you need to install a browser that supports WebGPU, such as Chrome 113 or Chrome Canary, and, as the project page describes, launch it with a special command. To learn more or to try WebLLM yourself, visit https://mlc.ai/web-llm/, which also hosts a demo based on the Vicuna-7b-v0 model. The project's source code is available in its GitHub repo.

WebLLM is a promising project that brings the power of large language models and chatbots to web browsers. By leveraging WebGPU and a machine learning compilation stack built on MLC LLM and Apache TVM, WebLLM enables users to chat with LLMs of their choice without compromising their privacy or paying for extra computation.

It also opens up a lot of exciting opportunities to build personalized and domain-specific AI assistants that run entirely on the client side. Try out WebLLM today and see how it can transform the way you interact with chatbots.
