Bloomberg's Custom Large Language Model for Finance: Meet BloombergGPT with 50 Billion Parameters

Highlights

- Bloomberg has unveiled a new large-scale generative artificial intelligence (AI) model, BloombergGPT.

- The large language model (LLM) is tailored to support a diverse range of natural language processing (NLP) tasks within the financial industry.

- Bloomberg's ML Product and Research group worked with the firm's AI Engineering team to create one of the most extensive domain-specific datasets, utilizing the company's existing data creation, collection, and curation resources.

- The team drew on 40 years' worth of financial language documents to build a comprehensive dataset of 363 billion tokens from English financial documents.

- The dataset was augmented with a 345-billion-token public dataset to create a training corpus of over 700 billion tokens.

- Using a portion of this corpus, the team trained a 50-billion-parameter decoder-only causal language model, which was evaluated on existing finance-specific NLP benchmarks, a suite of Bloomberg internal benchmarks, and general-purpose NLP tasks from popular benchmarks.

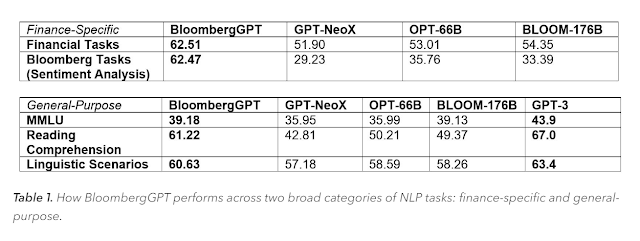

- The BloombergGPT model has demonstrated superior performance on financial tasks compared with existing open models of similar size, while performing as well or better on general NLP benchmarks.
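The dataset figures in the highlights combine as shown in the short sketch below. It only does arithmetic on the two reported token counts; it does not describe how Bloomberg actually sampled data during training (the highlights note that only a portion of the corpus was used). The `corpus_mix` helper is hypothetical.

```python
# Token counts in billions, as reported in the highlights.
FINANCIAL_TOKENS_B = 363  # Bloomberg's English financial documents
PUBLIC_TOKENS_B = 345     # general-purpose public data

def corpus_mix(financial: float, public: float) -> dict:
    """Return the combined corpus size and each source's share of it."""
    total = financial + public
    return {
        "total": total,
        "financial_share": financial / total,
        "public_share": public / total,
    }

mix = corpus_mix(FINANCIAL_TOKENS_B, PUBLIC_TOKENS_B)
print(f"total: {mix['total']}B tokens")
print(f"financial: {mix['financial_share']:.1%}, public: {mix['public_share']:.1%}")
```

This prints a total of 708 billion tokens, with the financial data making up roughly 51.3% and the public data roughly 48.7%, which is why the corpus is described as "over 700 billion tokens" and roughly half financial.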

Natural language processing (NLP) is a branch of artificial intelligence that deals with understanding and generating natural language texts. NLP has many applications in various domains, such as sentiment analysis, question answering, summarization, translation, and more. However, not all domains are equally well-served by the existing NLP models and methods. One such domain is finance, which has its own unique characteristics and challenges.

Finance is a complex and dynamic field that requires specialized knowledge and expertise. Financial texts are often rich in domain-specific terminology, jargon, abbreviations, and acronyms. They also contain numerical data, tables, charts, and graphs that need to be interpreted and integrated with the textual information. Moreover, financial texts are highly sensitive to the context and time of publication, as they reflect the current state and trends of the markets and the economy.

To address these challenges, Bloomberg has developed BloombergGPT, a large language model (LLM) trained on a wide range of financial data. BloombergGPT is a 50-billion-parameter decoder-only causal language model, the same general design as GPT-3 (Brown et al., 2020): a deep neural network built on the transformer architecture (Vaswani et al., 2017) that learns from large amounts of text data. Its training corpus combines a 363-billion-token dataset constructed from Bloomberg's extensive data sources, such as financial web content, news articles, company filings, press releases, and Bloomberg-authored content, with 345 billion tokens from general-purpose public datasets (The Pile, C4, and Wikipedia). These public datasets draw on sources such as Common Crawl and BooksCorpus (Zhu et al., 2015) and ensure broad coverage of topics and vocabulary.
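In a decoder-only causal language model, each token is predicted only from the tokens before it. A transformer enforces this by masking attention to future positions. The sketch below shows single-head scaled dot-product attention with such a causal mask in plain Python. It is a generic illustration of the technique, not BloombergGPT's implementation: it omits learned projections, multiple heads, feed-forward layers, and everything else in a real model, and the function name and toy inputs are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]

def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal mask.

    Position i attends only to positions 0..i, so no token can see the future.
    """
    d = len(q[0])
    out: Matrix = []
    for i in range(len(q)):
        # Scores for visible positions j <= i; later positions are skipped (masked).
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in range(i + 1)]
        top = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output is the attention-weighted sum of the visible value vectors.
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out

# Toy 2-dimensional "token embeddings"; q, k and v share them (no learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = causal_self_attention(x, x, x)

# Changing the last token leaves earlier outputs untouched: the model cannot peek ahead.
x_changed = [x[0], x[1], [5.0, -3.0]]
out_changed = causal_self_attention(x_changed, x_changed, x_changed)
assert out[:2] == out_changed[:2]
```

The final check captures the property that makes the model "causal". Because of it, the model can be trained to predict every next token of a document in a single pass.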

BloombergGPT is designed to support various NLP tasks in the financial domain, such as information extraction, text classification, text generation, and text understanding. To evaluate its performance, Bloomberg tested BloombergGPT on several standard LLM benchmarks, such as LAMBADA (Paperno et al., 2016), PIQA (Bisk et al., 2020), and ANLI (Nie et al., 2020), as well as on open financial benchmarks, such as the Financial PhraseBank sentiment dataset (Malo et al., 2014), FiQA (Maia et al., 2018), and FinCausal (Mariko et al., 2020). In addition, Bloomberg created a suite of internal benchmarks that reflect real-world use cases of BloombergGPT within the company and for its clients.
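Financial classification benchmarks like those listed above are usually scored with accuracy or F1. The minimal sketch below shows how these scores are computed for a three-way sentiment task. The article does not say which F1 averaging scheme the BloombergGPT evaluation used, so macro-averaging is an assumption here. The labels and predictions are made up, and the helper names are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence

def per_class_f1(gold: Sequence[str], pred: Sequence[str]) -> Dict[str, float]:
    """Compute F1 for every label that appears in the gold labels or the predictions."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted label p was wrong
            fn[g] += 1  # true label g was missed
    scores = {}
    for label in set(gold) | set(pred):
        p_den, r_den = tp[label] + fp[label], tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        denom = precision + recall
        scores[label] = 2 * precision * recall / denom if denom else 0.0
    return scores

def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 scores (an assumed averaging scheme)."""
    scores = per_class_f1(gold, pred)
    return sum(scores.values()) / len(scores)

def accuracy(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)

gold = ["positive", "negative", "neutral", "positive"]
pred = ["positive", "neutral", "neutral", "negative"]
print(f"accuracy={accuracy(gold, pred):.3f}, macro F1={macro_f1(gold, pred):.3f}")
```

On this toy data, accuracy is 0.5 and macro F1 is 4/9 (about 0.444). The gap arises because F1 also penalizes the model for never correctly predicting "negative". Such class-balance effects are why F1 is often preferred over accuracy for skewed financial sentiment datasets.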

The results show that BloombergGPT outperforms open models such as GPT-NeoX, OPT-66B, and BLOOM-176B on most financial tasks, often by significant margins, without sacrificing performance on general LLM benchmarks, where it performs on par with or better than models of comparable size.

BloombergGPT is a state-of-the-art language model purpose-built for finance. It leverages Bloomberg's rich and diverse data sources to learn from hundreds of billions of tokens of financial text. It demonstrates superior performance on a range of financial NLP tasks while remaining competitive on general NLP tasks, making it a powerful tool for enhancing the capabilities and efficiency of financial professionals and researchers.

Bloomberg is a prominent provider of business and financial information, offering dependable data, news, and insights that bring transparency, efficiency, and fairness to markets. Through reliable technology solutions, the company connects influential communities across the global financial ecosystem, helping its clients make more informed decisions and collaborate more effectively.

To learn more about BloombergGPT, you can read Bloomberg's press release here: https://www.bloomberg.com/company/press/bloomberggpt-50-billion-parameter-llm-tuned-finance/
